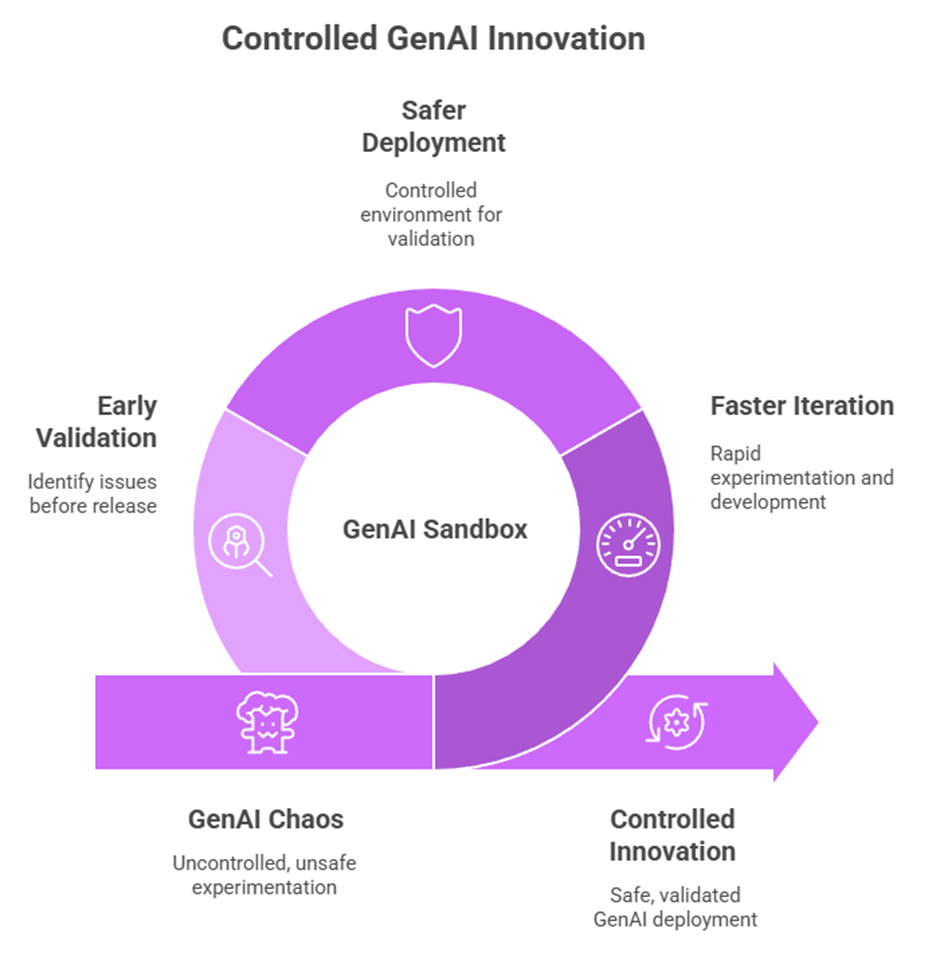

LLMs, RAG, and prompting evolve almost daily. The challenge isn’t building; it’s testing, validating, and improving safely. Enter the GenAI Sandbox: a space for faster iteration, safer deployment, and early validation.

From our experience with applications where a GenAI component plays a central role, we’ve observed that this part of the system introduces unique challenges and characteristics.

Architecture

The GenAI kernel typically includes:

- The LLM itself or an interface to it

- LLM tools (e.g., RAG)

- Prompting and orchestration (including tool selection)

This kernel generates outputs based on user input, which the broader application then processes. The rest of the application usually handles responsibilities such as process flow, database management, and the user interface.
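The split between the kernel and the rest of the application can be sketched in Python. This is a minimal illustration, not a reference implementation. `GenAIKernel`, `keyword_retriever`, and `fake_llm` are hypothetical names. The keyword matcher merely stands in for a real RAG retriever, and the fake model replaces a vendor API so the example runs offline. The design point is that the application calls only `answer()`, so prompts, tools, and models can change behind that boundary.

```python
from dataclasses import dataclass
from typing import Callable, List

# Hypothetical signature for any LLM backend: prompt in, text out.
LLMFn = Callable[[str], str]
# Hypothetical retriever signature: query in, relevant passages out (stands in for RAG).
RetrieverFn = Callable[[str], List[str]]


@dataclass
class GenAIKernel:
    llm: LLMFn
    retriever: RetrieverFn
    prompt_template: str  # expects {context} and {question} placeholders

    def answer(self, question: str) -> str:
        # Orchestration: retrieve context, build the prompt, call the model.
        passages = self.retriever(question)
        prompt = self.prompt_template.format(
            context="\n".join(passages), question=question
        )
        return self.llm(prompt)


def keyword_retriever(docs: List[str]) -> RetrieverFn:
    # Naive stand-in for RAG: return documents sharing at least one word with the query.
    def retrieve(query: str) -> List[str]:
        words = set(query.lower().split())
        return [d for d in docs if words & set(d.lower().split())]
    return retrieve


def fake_llm(prompt: str) -> str:
    # Placeholder model: echoes the last prompt line so the sketch runs offline.
    return "ANSWER: " + prompt.strip().splitlines()[-1]


kernel = GenAIKernel(
    llm=fake_llm,
    retriever=keyword_retriever(["Refunds take 14 days.", "Shipping is free."]),
    prompt_template="Context:\n{context}\nQuestion: {question}",
)
# The surrounding application only ever sees the returned string.
print(kernel.answer("How long do refunds take?"))
```

Swapping `fake_llm` for a client of a real model, or the template for a revised prompt, leaves the calling code unchanged, which is what makes the kernel testable in isolation.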

Characteristics of the GenAI kernel

- Limited transparency: For many, the GenAI component functions as a “black box,” with little visibility into how it works or its side effects.

- High sensitivity to changes: Even minor adjustments can have significant ripple effects. For instance, resolving an unwanted side effect of a prompt in one use case may cause that same prompt to produce unintended results in another. The same applies to changes in model versions, LLM tools, or RAG documents.

- Rapid evolution: LLMs, their versions, fine-tuning, supporting tools, and especially prompting/orchestration are evolving at a remarkable pace.

- Continuous improvement potential: As prompting and orchestration techniques mature, the overall performance of the application can steadily improve.

The need for structured testing

To enable this evolution responsibly, organizations need a robust test set and procedure, along with an environment to implement, run, and log changes safely. Mature development teams address this by integrating GenAI testing into their DTAP (Development, Test, Acceptance, Production) environments, often with sandboxing in place.

However, this represents only the best-case scenario. In practice, many organizations lack even a basic test environment with evaluators for prompts. This gap isn’t surprising: setting up such infrastructure is both costly and complex.
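A test set with evaluators does not have to be elaborate to be useful. The Python sketch below assumes each case pairs a question with one or more evaluator functions (the keyword and length checks are hypothetical examples) and produces one timestamped log record per case, tagged with the configuration version under test. Production evaluators are usually richer, but the structure stays the same.

```python
import json
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# An evaluator takes the model output and returns True (pass) or False (fail).
Evaluator = Callable[[str], bool]


def contains_all(*keywords: str) -> Evaluator:
    # Passes if every keyword appears in the output (case-insensitive).
    return lambda out: all(k.lower() in out.lower() for k in keywords)


def max_length(n: int) -> Evaluator:
    # Passes if the output is at most n characters long.
    return lambda out: len(out) <= n


@dataclass
class EvalCase:
    case_id: str
    question: str
    evaluators: List[Evaluator] = field(default_factory=list)


def run_test_set(kernel: Callable[[str], str], cases: List[EvalCase], version: str) -> List[Dict]:
    """Run every case through the kernel and return one log record per case."""
    log = []
    for case in cases:
        output = kernel(case.question)
        log.append({
            "version": version,  # which prompt/model configuration was tested
            "case_id": case.case_id,
            "passed": all(ev(output) for ev in case.evaluators),
            "output": output,
            "timestamp": time.time(),
        })
    return log


cases = [
    EvalCase("refund", "How long do refunds take?", [contains_all("14 days"), max_length(200)]),
    EvalCase("shipping", "Is shipping free?", [contains_all("free")]),
]
# Hypothetical kernel stub; in a sandbox this would call the real GenAI kernel.
stub = lambda q: "Refunds take 14 days." if "refund" in q.lower() else "Shipping costs 5 EUR."
results = run_test_set(stub, cases, version="prompt-v2")
print(json.dumps([{k: r[k] for k in ("case_id", "passed")} for r in results]))
```

Persisting these records per version is what turns ad hoc prompt tweaking into a traceable change history.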

The concept of a GenAI sandbox

This challenge has led to the idea of a dedicated GenAI sandbox environment (potentially cloud-based). Such an environment would:

- Allow testing without full-scale development

- Use the same core components as the production GenAI solution

- Support test sets and evaluators to assess responses effectively

Because changes to the GenAI kernel are expected to occur far more frequently than changes to the rest of the application, such a sandbox would enable continuous and safe improvements to prompts and orchestration.

With this approach, any adjustment to the GenAI kernel could be tested quickly. If a DTAP pipeline exists, changes would still progress through it. But even without a complete DTAP setup, this sandbox would already mitigate much of the risk associated with frequent modifications.
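Because fixing one use case can break another, a sandbox check should compare a candidate change with the current baseline case by case rather than by overall score alone. A minimal sketch, assuming both runs produce pass/fail results keyed by a hypothetical test-case ID; the promotion rule (no previously passing case may fail) is an illustrative policy, not a fixed standard.

```python
from typing import Dict, List, Tuple


def compare_runs(baseline: Dict[str, bool], candidate: Dict[str, bool]) -> Tuple[List[str], List[str]]:
    """Return (regressions, improvements) between two runs of the same test set.

    A regression is a case that passed on the baseline but fails on the candidate;
    an improvement is the reverse. Cases missing from either run are ignored.
    """
    shared = sorted(baseline.keys() & candidate.keys())
    regressions = [c for c in shared if baseline[c] and not candidate[c]]
    improvements = [c for c in shared if not baseline[c] and candidate[c]]
    return regressions, improvements


def may_promote(baseline: Dict[str, bool], candidate: Dict[str, bool]) -> bool:
    # Hypothetical gate: block promotion if any previously passing case now fails.
    regressions, _ = compare_runs(baseline, candidate)
    return not regressions


# Example: the new prompt fixes the "tone" case but breaks "refund".
baseline = {"refund": True, "shipping": True, "tone": False}
candidate = {"refund": False, "shipping": True, "tone": True}
print(compare_runs(baseline, candidate))  # (['refund'], ['tone'])
print(may_promote(baseline, candidate))   # False
```

A stricter policy could also require a minimum overall pass rate; a looser one could allow known, accepted regressions.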

Why not just test with my preferred LLM and chatbot?

Of course, it is possible to test ideas directly with a preferred LLM or chatbot. However, this approach has significant limitations:

- If your application relies on RAG with proprietary documents, you may need to replicate that setup in testing, which is not typically supported by standard chatbots.

- If you want to compare multiple LLMs from different vendors, this is difficult to achieve through a single chatbot interface.

- Many important parameters and configurations, such as temperature, top-k, context handling, or tool orchestration, are not accessible in consumer-facing chatbots.

- Testing in isolation does not reflect the end-to-end behavior of the application, where outputs are processed, logged, and evaluated as part of a larger workflow.

In short, while a chatbot can provide quick insights, it does not provide the controlled, repeatable, and comprehensive environment needed for professional application testing. A GenAI sandbox bridges this gap by replicating the actual architecture and enabling systematic evaluation.
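Comparing vendors and parameter settings systematically is one of the main things a chatbot interface cannot offer. A minimal sketch, assuming hypothetical provider and model labels and a `RunConfig` record holding two of the settings listed above: the sandbox enumerates every combination so that each can be run against the same test set. Not every provider exposes every parameter, so a real configuration would need per-vendor validation.

```python
from dataclasses import dataclass
from itertools import product
from typing import List, Tuple


@dataclass(frozen=True)
class RunConfig:
    provider: str       # hypothetical vendor label, e.g. "vendor-a"
    model: str          # hypothetical model identifier
    temperature: float  # sampling temperature
    top_k: int          # sample only from the k most likely tokens


def config_grid(models: List[Tuple[str, str]], temperatures: List[float], top_ks: List[int]) -> List[RunConfig]:
    """Build every (model, temperature, top_k) combination to evaluate."""
    return [
        RunConfig(provider, model, t, k)
        for (provider, model), t, k in product(models, temperatures, top_ks)
    ]


grid = config_grid(
    models=[("vendor-a", "model-x"), ("vendor-b", "model-y")],
    temperatures=[0.0, 0.7],
    top_ks=[40],
)
for cfg in grid:
    print(cfg)
print(len(grid))  # 4
```

Because each `RunConfig` is immutable and hashable, it can double as the key under which test results are logged and compared.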

Additional use case: early-stage validation

A GenAI sandbox could also add value at the ideation stage of a project. Without building the full solution, teams could experiment with and validate the critical GenAI components of a future application. At this early stage, regulators and stakeholders could already review and assess whether the AI kernel is capable of delivering on the design’s intent.

Laiyertech has developed an AI software platform that organizations also use as a GenAI sandbox. This sandbox can be deployed on our cloud, on the organization’s own cloud, or on premises, and is available under a shared source license.

Our approach is to work collaboratively with your in-house software development team(s) or with your preferred IT vendors to realize an optimal AI application for the organization.
