Over the last year and a half, I have redefined how I code. Having spent many years building large-scale systems, I knew my process well, but the arrival of AI changed the process itself. What was once structured programming has become what is now called vibe coding: shaping intent, context, and tone through dialogue with AI. By vibe coding I mean guiding AI-generated development through direction and review rather than handing over the work entirely. It is a disciplined way to design and express solutions in language instead of syntax. The shift was not spontaneous. It was a deliberate, methodical exploration of what responsible AI adoption can look like in practice.

At first, I used AI only for review. That was safe territory: transparent, verifiable, and reversible. I could assess what it produced and identify the boundaries of its usefulness. Early on, a pattern emerged: its technical knowledge often lagged behind current practice. It was accurate in parts, but sometimes anchored in older methods. The same applies to organizations. AI adoption should begin with understanding where the system’s knowledge ends and where your own responsibility begins.

Gradually, I extended its scope from isolated snippets to more complex functions. Each step was deliberate, guided by process and review. What emerged was less a matter of delegation than of alignment. I realized that my values as a developer, such as maintainability, testing, and clear deployment practices, are not negotiable. They form the ethical infrastructure of my work. AI should never replace these foundations but help protect them. The same holds true for organizations. Core values are not obstacles to progress; they are the conditions that make progress sustainable.

Metaphors are always risky, and I am aware of that. They can simplify too much. Yet they help clarify what is often hard to explain. My work with AI feels similar to how an organization integrates a new team member. LLMs are not deterministic. They hallucinate, carry biases, and are bounded by their training data. But then, are humans any different? People join with preconceptions, partial knowledge, and habits shaped by their past. We do not simply unleash them into production. We mentor, guide, monitor, and integrate them. Over time, trust builds through supervised autonomy. The process of bringing AI into a workflow should be no different.

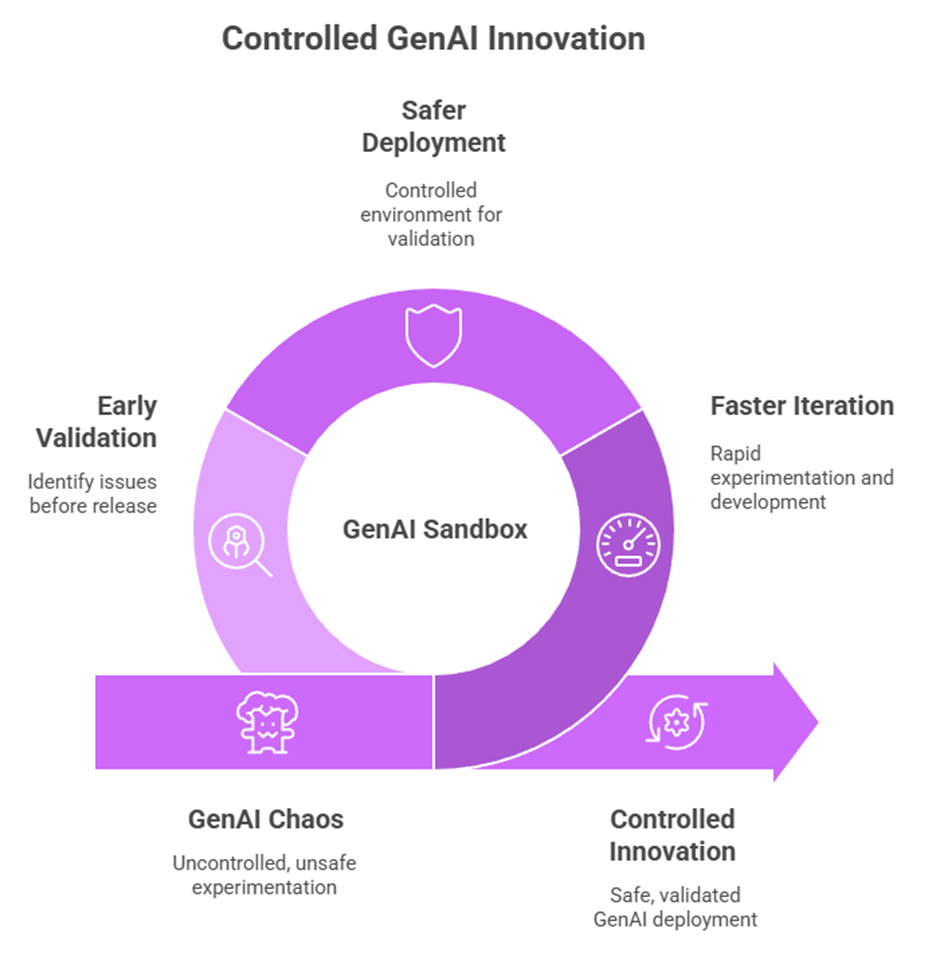

In both cases, human or machine, responsible adoption is a process of mutual adaptation. The AI learns from context and feedback, and we learn to express intent more precisely and to build systems that preserve oversight. The goal is not perfect control but a continuous dialogue between capability and governance.

Responsible AI adoption is not about efficiency at any cost. It is about preserving integrity while expanding capacity. Just as I review AI-generated code, organizations must regularly review how AI affects their own reasoning, values, and culture. Responsibility does not mean hesitation. It means understanding the tool well enough to use it creatively and safely. What matters most is staying in the loop, with human judgment as the final integration step.

So my journey from code review to responsible orchestration mirrors what many organizations face today. The key lessons are consistent:

• Start small and learn deliberately.

• Protect what defines you: values, standards, and judgment.

• Build clear guardrails and governance.

• Scale only when understanding is mature.

• Stay actively in the loop.

AI, like a capable team of colleagues, can strengthen what already works and reveal what needs attention. But it must be guided, not followed. The craft of programming has not disappeared; it has moved upstream, toward design, review, and orchestration. In code, I protect my principles, and organizations should do the same. The future of work lies in mastering this dialogue: preserving what makes us human while learning how to work, decide, and lead with a new kind of intelligence.